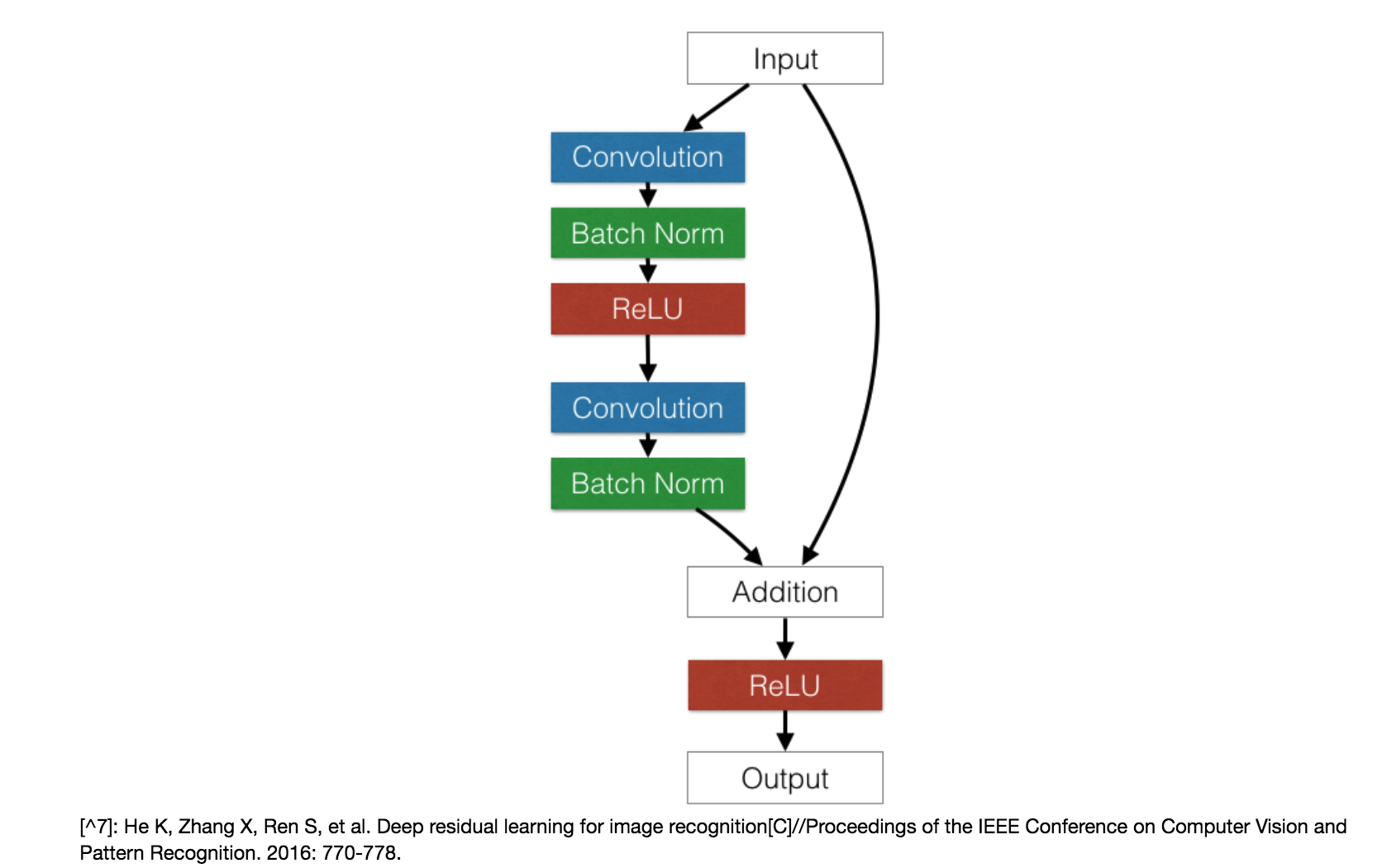

Residual block和layer出现了多次,故将Residual block实现为一个子Module,layer实现为子函数

结合使用了nn.Module、nn.functional,尽量使用nn.Sequential

每个Residual block都有一个shortcut, 如果其和主干卷积网络的输入输出通道不一致或步长不为1时, 需要有专门单元将二者转成一致才可以相加

本程序为ResNet实现,实际使用可直接调用torchvision.medels接口,其实现了大部分model

import torch as t

from torch import nn

from torch.nn import functional as F

# 本程序为ResNet实现,实际使用可直接调用torchvision接口,其实现了大部分model

# 使用方式:

# from torchvision import models

# model = models.resnet34()

class ResidualBlock(nn.Module):

"""

实现子module:ResidualBlock

用子module来实现residual block,在_make_layer()中调用

"""

def __init__(self, inchannel, outchannel, stride=1, shortcut=None):

# 继承时需要使用 super(FooChild,self) ,

# 首先找到 FooChild 的父类(就是类 FooParent),然后把类 FooChild 的对象转换为类 FooParent 的对象

super(ResidualBlock, self).__init__()

self.left = nn.Sequential(

nn.Conv2d(inchannel, outchannel, 3, stride, 1, bias=False), # 只在第一个卷积层进行通道变换

nn.BatchNorm2d(outchannel),

nn.ReLU(inplace=True),

nn.Conv2d(outchannel, outchannel, 3, 1, 1, bias=False),

nn.BatchNorm2d(outchannel))

self.right = shortcut

def forward(self, x):

out = self.left(x) # x 经过网络分支

residual = x if self.right is None else self.right(x)

out += residual

return F.relu(out)

class ResNet(nn.Module):

'''

实现主module:ResNet34

ResNet34 包含多个layer,每个layer又包含多个residual block

用_make_layer函数来实现layer,用子module来实现residual block

'''

def __init__(self, num_classes=1000): # 定义了分类数

super(ResNet, self).__init__()

""" 前几层网络 """

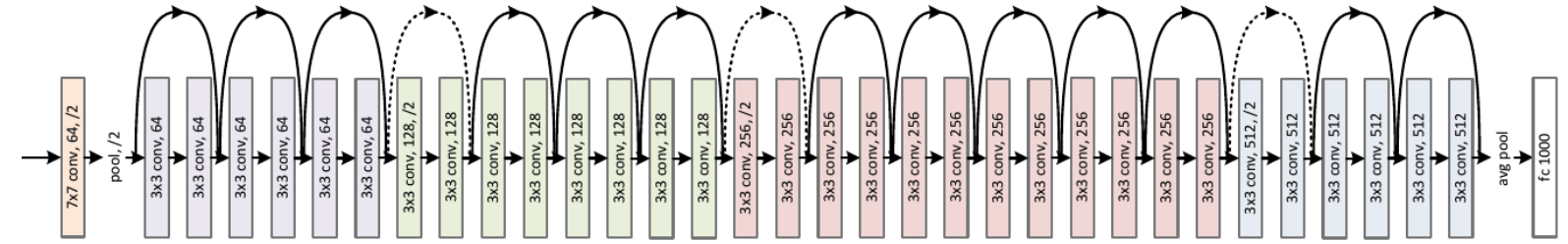

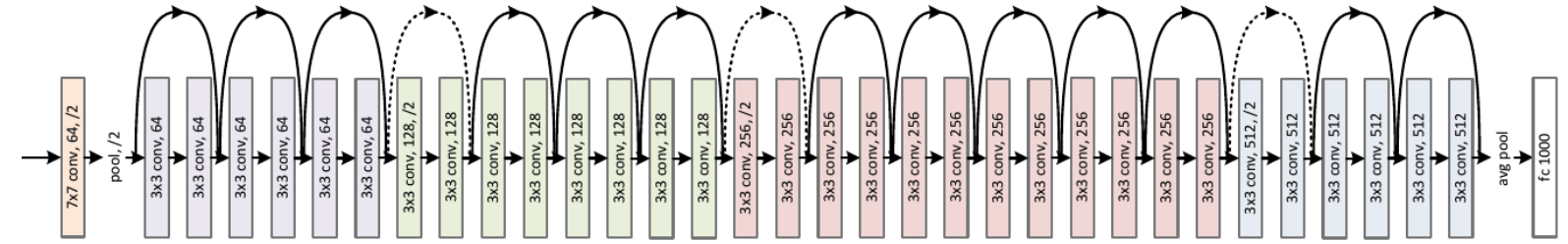

self.pre = nn.Sequential(

nn.Conv2d(3, 64, 7, 2, 3, bias=False),

nn.BatchNorm2d(64),

nn.ReLU(inplace=True),

nn.MaxPool2d(3, 2, 1))

""" 重复的layer,分别有3、4、6、3个Residual block"""

self.layer1 = self._make_layer(64, 64, 3, 1)

self.layer2 = self._make_layer(64, 128, 4, 2)

self.layer3 = self._make_layer(128, 256, 5, 2)

self.layer4 = self._make_layer(256, 512, 3, 2)

""" 分类用的全连接层 """

self.fc = nn.Linear(512, num_classes)

def _make_layer(self, inchannel, outchannel, block_num, stride=1):

"""

构建 layer, 包含多个Residual block

"""

shortcut = nn.Sequential(

nn.Conv2d(inchannel, outchannel, 1, stride, bias=False), # kernel size=1

nn.BatchNorm2d(outchannel))

layers = []

# 第一层个Residual Block,需要改变通道数, 使用1*1的卷积核来进行通道数改变

layers.append(ResidualBlock(inchannel, outchannel, stride, shortcut))

# 剩下的Residual Block

for i in range(1, block_num):

layers.append(ResidualBlock(outchannel, outchannel))

return nn.Sequential(*layers)

def forward(self, x):

"""

网络结构构建

"""

x = self.pre(x)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = F.avg_pool2d(x, 7)

x = x.view(x.size(0), -1)

return self.fc(x)

model = ResNet()

input = t.randn(1,3,224,224)

print(input)

o = model(input)

print(o)